My thoughts about On Intelligence by Jeff Hawkins

Introduction

In this post, I will summarize the key ideas of Jeff Hawkins’s book in case I forget the details, and I will offer my own thoughts on Hawkins’s memory-prediction framework. I was very intrigued by the ideas proposed in this book.

Summary

Chapters 1 & 2: The distinction between Machine Learning and a brain-based approach to AI

Hawkins proposes that we cannot measure the intelligence of a system by its output alone, citing the Chinese Room thought experiment as an example. As René Descartes said, “I think, therefore I am.” An intelligent machine is characterized by its ability to think, not necessarily by its output.

Hawkins argues that current ML/AI does not capture “intelligence” or what it means. He proposes instead that intelligence arises from the structure of the cortex, and he explains his functionalist view: what matters for intelligence is how a system is organized, not what it is made of.

Chapters 3 & 4

We get our first introduction to the cortex and Vernon Mountcastle’s observations about its structure. Mountcastle observed that different areas of the cortex (despite being responsible for different functions) are structured very similarly, and therefore proposed that these regions all run one common ‘cortical algorithm’.

Delving into this algorithm, we see that the neocortex

- stores sequences of patterns

- recalls correct patterns auto-associatively (can recall patterns even with garbled input)

- stores patterns in an invariant form

- stores patterns in a hierarchy
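Auto-associative recall, the second property above, is often demonstrated with a Hopfield network. The sketch below is that classic model, not Hawkins’s cortical algorithm, and it says nothing about sequences, invariance, or hierarchy. It assumes bipolar (+1/-1) patterns and uses mutually orthogonal rows of a Hadamard matrix as the stored memories so that recovery from a few garbled bits is guaranteed; the function names are my own.

```python
import numpy as np

def hadamard(order: int) -> np.ndarray:
    """Sylvester construction: a 2**order x 2**order matrix of +1/-1 with orthogonal rows."""
    h = np.array([[1]])
    for _ in range(order):
        h = np.block([[h, h], [h, -h]])
    return h

def train_hopfield(patterns: np.ndarray) -> np.ndarray:
    """Hebbian weights for bipolar patterns (one pattern per row)."""
    n = patterns.shape[1]
    weights = patterns.T @ patterns / n
    np.fill_diagonal(weights, 0.0)  # no self-connections
    return weights

def recall(weights: np.ndarray, probe: np.ndarray, max_steps: int = 10) -> np.ndarray:
    """Update every unit at once until the state stops changing."""
    state = probe.copy()
    for _ in range(max_steps):
        new_state = np.where(weights @ state >= 0, 1, -1)
        if np.array_equal(new_state, state):
            break
        state = new_state
    return state

stored = hadamard(6)[1:4]          # three orthogonal 64-bit memories
weights = train_hopfield(stored)

rng = np.random.default_rng(0)
noisy = stored[0].copy()
noisy[rng.choice(64, size=8, replace=False)] *= -1  # garble 8 of the 64 bits

restored = recall(weights, noisy)
print(np.array_equal(restored, stored[0]))  # True
```

The garbled probe settles back onto the complete stored pattern, which is the sense in which the memory is "auto-associative": part of a pattern (or a noisy copy) retrieves the whole.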

Chapters 5 & 6: The memory-prediction framework

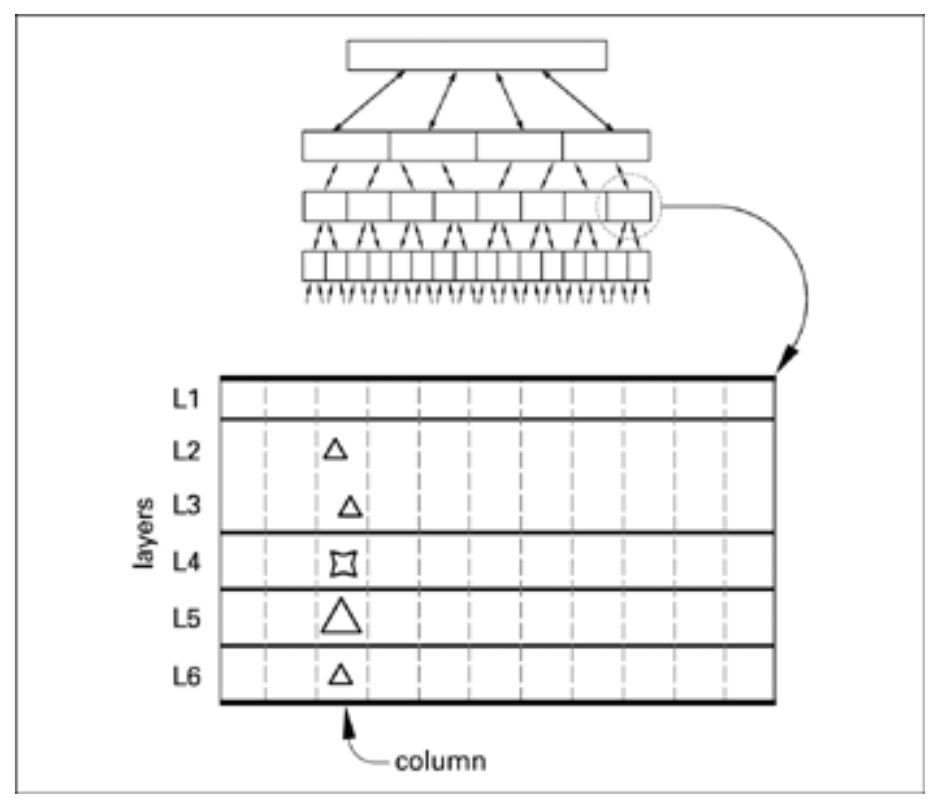

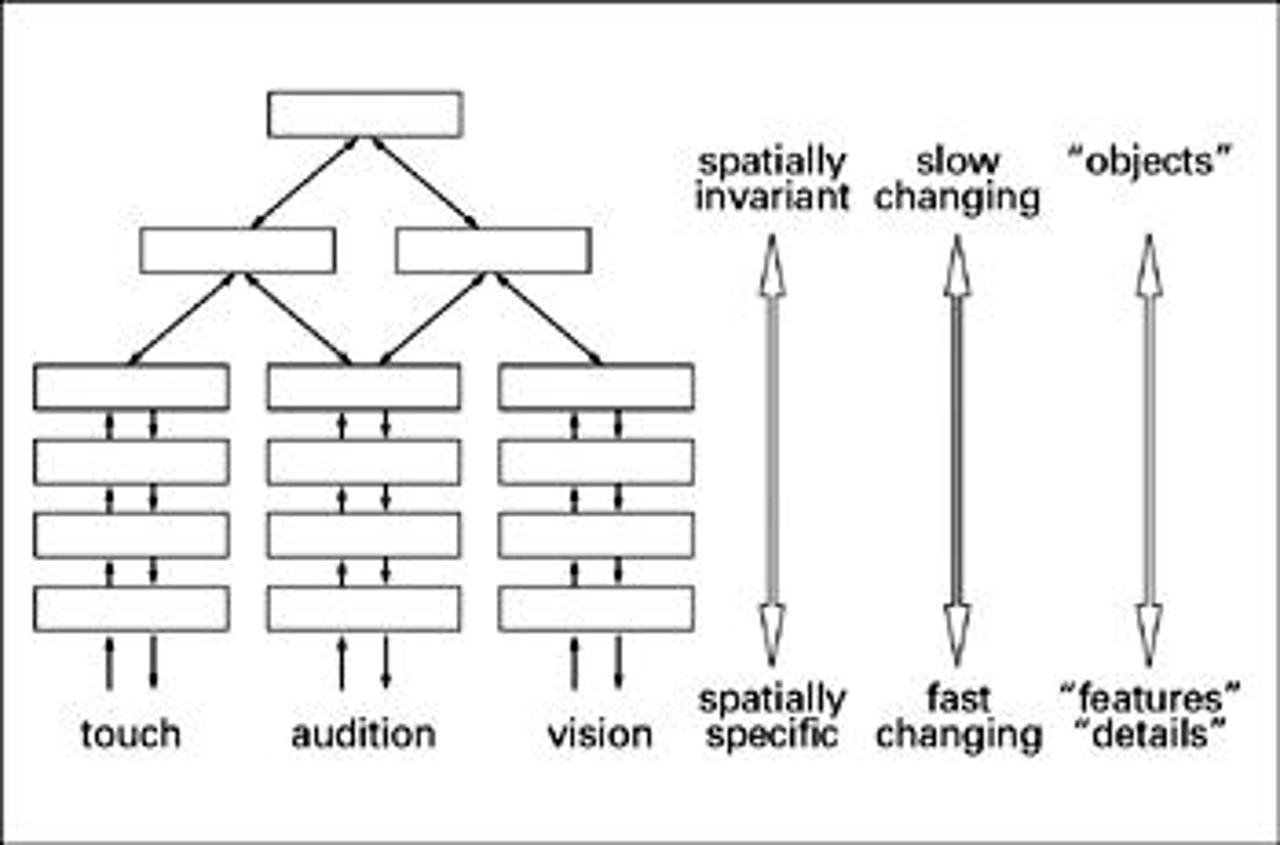

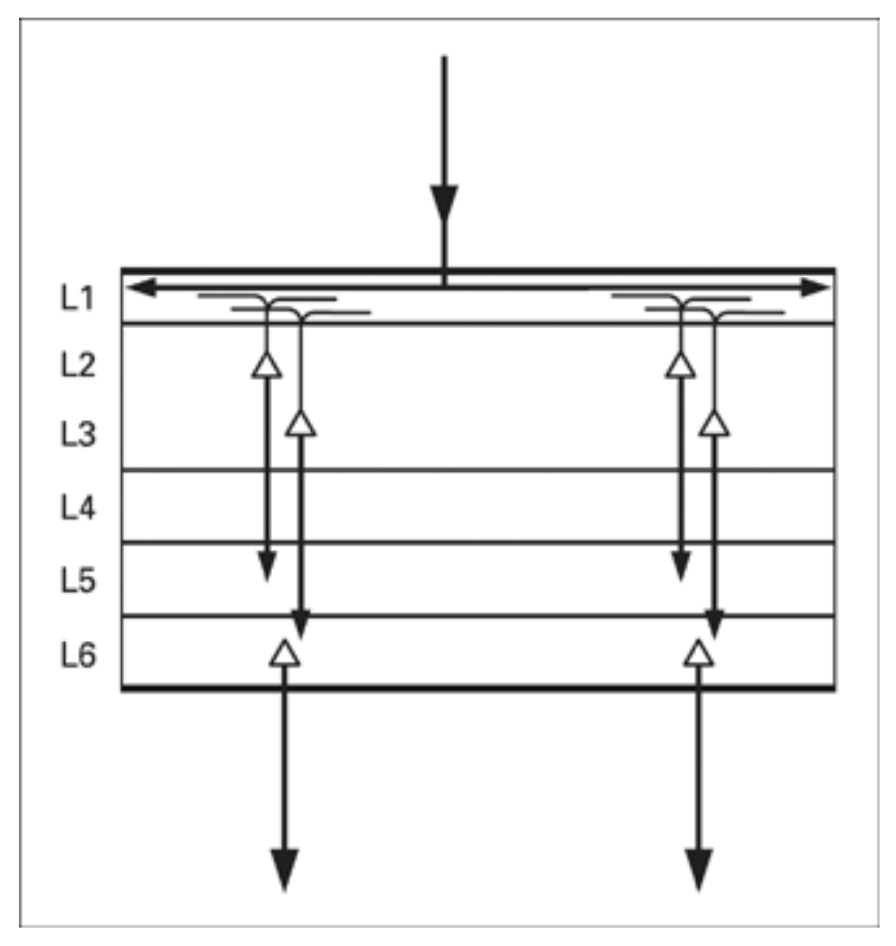

In these chapters, Hawkins fleshes out his complete theory: the cortex is organized as a bidirectional hierarchy of regions. Each region is composed of cortical columns, each of which spans six layers of cortical cells.

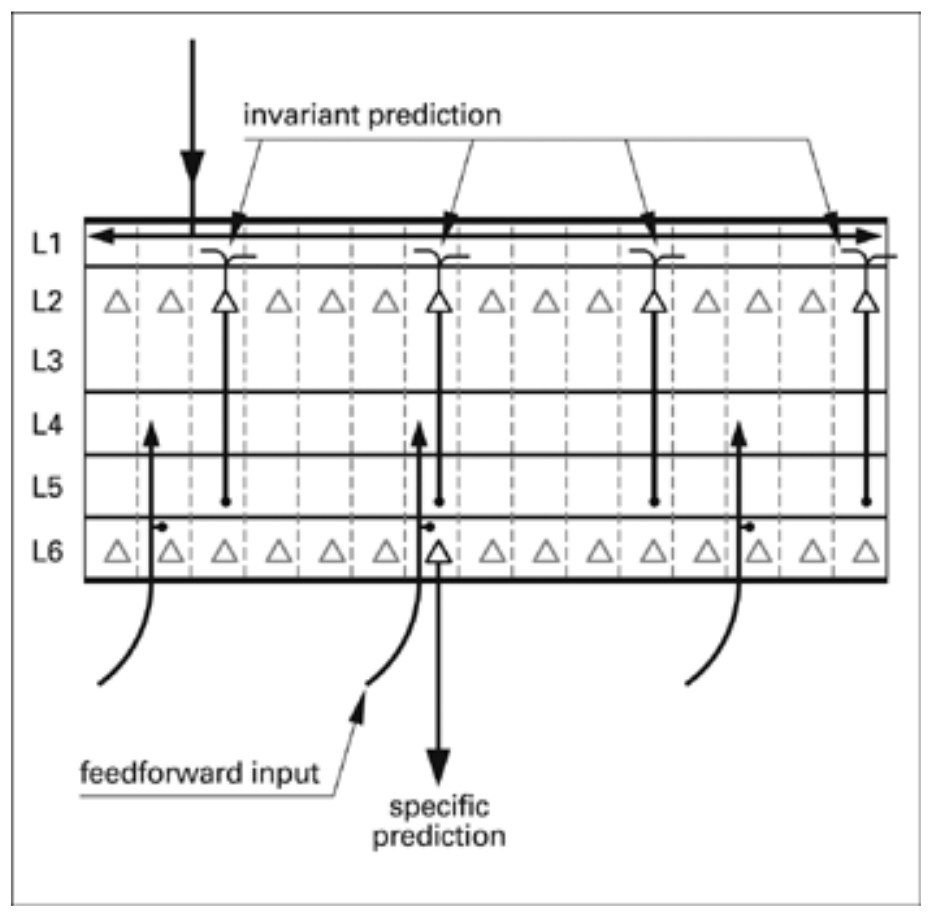

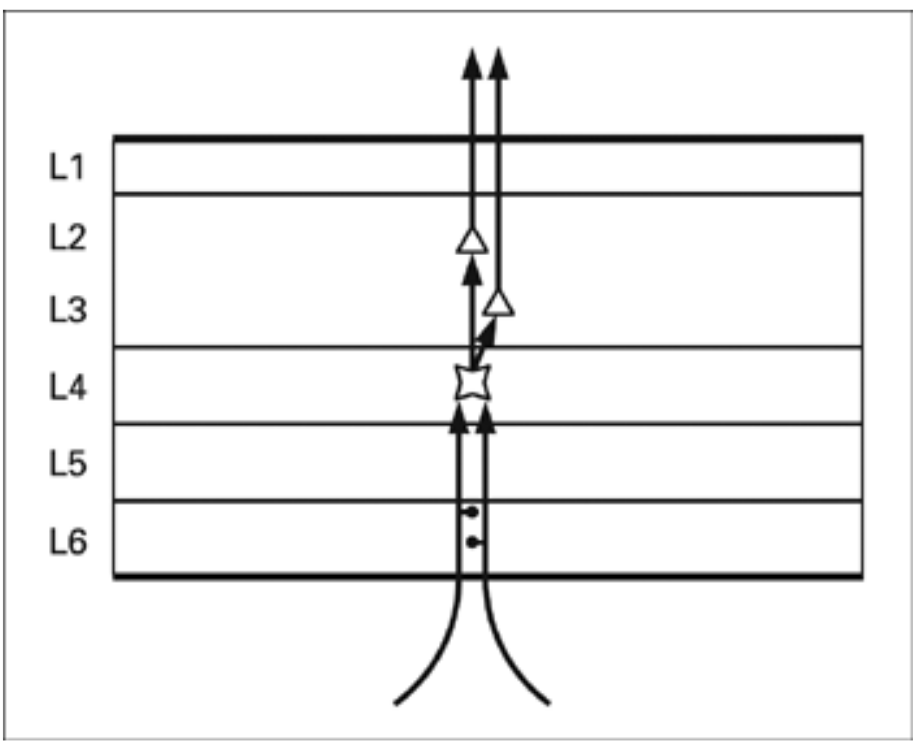

Bottom-up inputs to each ‘region’ are the ‘names’ of patterns detected by the region below, or raw input patterns from the senses. Inputs from higher regions represent expectations, or predictions, of what the lower region should receive shortly, based on what it has received previously. Bottom-up inputs arrive at cells in Layer 4 and travel up to cells in Layers 2 and 3, where they are recognized; top-down predictions arrive in Layer 1. From this, we see that:

- Each region predicts what the region below it should receive next, based on what that lower region has already reported to it (via ‘pattern names’ or raw patterns). This prediction is a kind of ‘heads up’.

- Each region remembers frequent patterns and generates names for these patterns, which provides abstraction.

- Layer 2 and Layer 3 cells send axons to the next higher region. The activity of these cells determines the input to the higher region, which should be the name of the recognized sequence: a pattern that stays constant while the sequence unfolds.

Thus, the inputs from lower regions into Layer 4 are converted into a name in Layers 2 and 3 and passed on.

- Layer 2 cells represent the name of the sequence and fire during learned sequences. Layer 3b cells fire when a column becomes active without getting the heads up first. Thus, as the column learns, the Layer 3b cells become quieter.

- When a region receives a prediction, it checks whether the next pattern it receives matches the prediction from its parent region. If it does not, it forwards the raw pattern up the hierarchy rather than the name. Thus, our cortex automatically brings unexpected patterns to our attention, such as an object out of place in a familiar room.

- The hippocampus is located at or near the top of the cortical pyramid. New inputs that make it all the way to the top are formed into new memories. As novel patterns are experienced over and over, these memories are re-formed in regions lower than the hippocampus.
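The predict / name / escalate loop described in the list above can be caricatured in a few lines. This is a hypothetical toy, not Hawkins’s circuit: `ToyRegion`, its methods, and the melody-like sequence are my own illustration, and it simplifies "prediction" to matching inputs position by position against a stored sequence that the parent region has named.

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass
class ToyRegion:
    """Hypothetical 'region' that stores named sequences of patterns."""
    sequences: dict[str, list[str]] = field(default_factory=dict)

    def learn(self, name: str, sequence: list[str]) -> None:
        # Remember a frequent sequence under a name (the abstraction sent upward).
        self.sequences[name] = list(sequence)

    def process(self, expected_name: str, inputs: list[str]) -> list[str]:
        """Check each input against the sequence the parent region expects.

        While predictions hold, the stable name goes up the hierarchy;
        an unexpected pattern is forwarded raw instead.
        """
        expected = self.sequences.get(expected_name, [])
        sent_up = []
        for t, pattern in enumerate(inputs):
            predicted = expected[t] if t < len(expected) else None
            sent_up.append(expected_name if pattern == predicted else pattern)
        return sent_up

region = ToyRegion()
region.learn("tune", ["C", "E", "G", "C"])
as_predicted = region.process("tune", ["C", "E", "G", "C"])
with_surprise = region.process("tune", ["C", "E", "F#", "C"])
print(as_predicted)   # ['tune', 'tune', 'tune', 'tune']
print(with_surprise)  # ['tune', 'tune', 'F#', 'tune']
```

While everything matches, the parent only ever sees the constant name; the one surprising note is what "makes it up" the hierarchy, which mirrors the misplaced-object example.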

What does this mean as a whole?

This results in some interesting properties. Certain cortical columns are concerned with specific details of the senses, such as touch input from a single finger or a certain area of the visual field. These columns may ‘light up’, or become active, when something specific, such as a sharp edge, appears in that particular area. As we travel up the hierarchy, however, we find areas that light up whenever a particular object, such as a certain face, is visible anywhere in the visual field. Data becomes more abstract as it travels up the hierarchy.
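One loose way to picture "more abstract going up" is max pooling from convolutional networks, borrowed here as an analogy rather than as Hawkins’s mechanism. In this hypothetical sketch, each low-level "column" watches one position of a one-dimensional image and fires on a sharp edge there, while a higher unit fires if an edge appears anywhere, discarding the location.

```python
import numpy as np

def low_level_columns(image_row: np.ndarray) -> np.ndarray:
    """Hypothetical columns: one per position, firing on a sharp edge at that spot."""
    # An 'edge' here is a jump of more than 0.5 between neighbouring pixels.
    return (np.abs(np.diff(image_row)) > 0.5).astype(int)

def higher_level_unit(column_activity: np.ndarray) -> int:
    """Fires if any column fired: the 'where' is thrown away, the 'what' is kept."""
    return int(column_activity.max())

left_edge = np.array([0, 1, 1, 1, 1, 1], dtype=float)
right_edge = np.array([0, 0, 0, 0, 0, 1], dtype=float)
blank = np.zeros(6)

for row in (left_edge, right_edge, blank):
    cols = low_level_columns(row)
    print(cols, higher_level_unit(cols))
```

The two edges activate different low-level columns but the same higher-level response, which is a crude version of an invariant representation.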

Furthermore, the cortex stores patterns invariantly, meaning that higher regions are not concerned with the details of certain ideas. If the cortex wants to perform an action, it sends an invariant representation of that action down to Layer 1 of lower regions, and cortical columns in those lower regions take care of recalling the specific muscle movements related to the input pattern. Similarly, English can be spoken, typed, or written, yet higher regions only think of the words; lower regions take care of transforming the invariant representation of the language into specific finger movements or vocal sounds.
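The division of labour in the language example can be sketched as one invariant output fanned out to several effector-specific unfoldings. Everything here is hypothetical and deliberately simplistic: the region functions and the "press"/"write" actions are placeholders, not a model of motor cortex.

```python
def higher_region(intent: str) -> str:
    """Hypothetical high-level region: emits only the invariant form (the word)."""
    return intent

def typing_region(word: str) -> list[str]:
    """Hypothetical lower region: unfolds the word into key presses."""
    return [f"press {letter}" for letter in word]

def handwriting_region(word: str) -> list[str]:
    """Hypothetical lower region: unfolds the same word into pen strokes."""
    return [f"write {letter}" for letter in word]

word = higher_region("cat")
typed = typing_region(word)          # ['press c', 'press a', 'press t']
written = handwriting_region(word)   # ['write c', 'write a', 'write t']
print(typed)
print(written)
```

The higher region's output never changes with the effector; only the lower regions know the details.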

Chapters 7 & 8

Hawkins addresses subtopics of intelligence such as consciousness, creativity, and imagination. One idea from these chapters that stuck out to me was Hawkins’ view that imagination is simply the cortex predicting the outcomes of actions. Hawkins also proposes that reality is subjective, because what we perceive depends on how accurate our cortical model of the world is.

Hawkins also lays out some expectations for the future of Artificial Intelligence, noting several advantages of artificial intelligence over biological intelligence. Specifically,

- Speed: Silicon is much faster than biological neurons, so artificial cortexes would likely process information faster than we do

- Capacity: Artificial cortexes can be expanded with more ‘memory’ while human memory is limited.

- Replicability: We can easily transfer data between systems and duplicate such data

- Sensory input: We can add whatever sensors we need and can feed in data from the world.

Finally, Hawkins explains why AI is unlikely to go ‘runaway’. The cortex does not generate emotions or desires on its own, so an artificial cortex would offer human-like intuition that we can use as a tool, nothing more. Our AIs will not have the desire to be ‘free’.

My thoughts

I was intrigued by the parallels with existing concepts of memory in current ML techniques. For example, when Hawkins explains how auto-associative memories are formed by feeding the output of a group of columns back into Layer 1 of a region as an input, I was reminded of autoregressive models.

Autoregressive models predict the value at each timestep from the values at earlier timesteps. Recurrent networks such as RNNs and LSTMs carry this information forward in a hidden state: the state h_t depends on the current input x_t and on the previous state h_{t-1}, and therefore on every earlier input. This lets RNNs and LSTMs build a form of memory and work well with sequential patterns, although this is a shallow imitation of Hawkins’ idea.
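The hidden-state recurrence can be made concrete with a vanilla (Elman) RNN step in NumPy. This is a minimal sketch under stated assumptions: the weights are random and untrained, the sizes are arbitrary, and LSTM gating is not shown. It only demonstrates that changing the first input of a sequence still changes the final state, because that input's influence is carried forward in h.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4

# Untrained, randomly initialised weights of a vanilla (Elman) RNN.
W_x = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b = np.zeros(hidden_size)

def rnn_step(h_prev: np.ndarray, x_t: np.ndarray) -> np.ndarray:
    # h_t = tanh(W_x x_t + W_h h_{t-1} + b): the new state mixes input and memory.
    return np.tanh(W_x @ x_t + W_h @ h_prev + b)

def final_state(sequence: np.ndarray) -> np.ndarray:
    h = np.zeros(hidden_size)
    for x_t in sequence:
        h = rnn_step(h, x_t)
    return h

xs = rng.normal(size=(5, input_size))
h_final = final_state(xs)

# Perturb only the FIRST input: the final state still changes, because
# information from x_0 has been carried forward through the hidden state.
xs_changed = xs.copy()
xs_changed[0] += 1.0
h_changed = final_state(xs_changed)
print(np.linalg.norm(h_final - h_changed))
```

Compared with Hawkins’s picture, there is no hierarchy, no naming of sequences, and no top-down prediction here, which is part of why the resemblance feels shallow.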

I thought that the ideas Hawkins proposes in his book are largely unparalleled in machine learning and very thought-provoking. While considering how someone could implement the neocortex in computers, I realized that the brain automatically tunes itself to interpret the inputs it receives. For example, George M. Stratton, an American psychologist, wore glasses that inverted his vision for several days. After dealing with nausea, he was able to function normally with his inverted vision by around the 7th day. Since the cortex can adapt to and make sense of brand-new patterns, I imagine that a digital cortex should likewise tune itself automatically to its inputs.
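A minimal sketch of what "tuning itself to its inputs" could mean for a sequence learner, assuming a first-order transition model whose counts decay exponentially so that old statistics fade. `OnlinePredictor`, the decay of 0.9, and the "inverted" symbol stream are all hypothetical, a loose analogy to Stratton’s goggles rather than a claim about how the cortex adapts.

```python
from collections import defaultdict

class OnlinePredictor:
    """Hypothetical first-order sequence predictor with exponentially decaying counts."""

    def __init__(self, decay: float = 0.9):
        self.decay = decay
        self.counts = defaultdict(lambda: defaultdict(float))

    def predict(self, prev: str):
        followers = self.counts[prev]
        return max(followers, key=followers.get) if followers else None

    def update(self, prev: str, current: str) -> None:
        followers = self.counts[prev]
        for symbol in followers:        # old evidence fades a little...
            followers[symbol] *= self.decay
        followers[current] += 1.0       # ...and the new transition is reinforced

def accuracy(predictor: OnlinePredictor, sequence: str) -> float:
    """Predict each next symbol, then learn from it (online)."""
    hits = 0
    for prev, current in zip(sequence, sequence[1:]):
        hits += predictor.predict(prev) == current
        predictor.update(prev, current)
    return hits / (len(sequence) - 1)

normal = "ABC" * 20      # the familiar world: A -> B -> C -> A ...
inverted = "ACB" * 20    # the 'inverted' world: every transition reversed
model = OnlinePredictor()
acc_normal = accuracy(model, normal)
acc_first = accuracy(model, inverted[:12])   # right after the switch
acc_later = accuracy(model, inverted[12:])   # after some exposure
print(acc_normal, acc_first, acc_later)
```

Accuracy collapses right after the switch and then recovers without any retraining step, because the decay lets fresh evidence outweigh the stale habits. Without decay, the old counts would take far longer to overturn.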

Images taken from On Intelligence by Jeff Hawkins and Sandra Blakeslee